Radiosity Baker

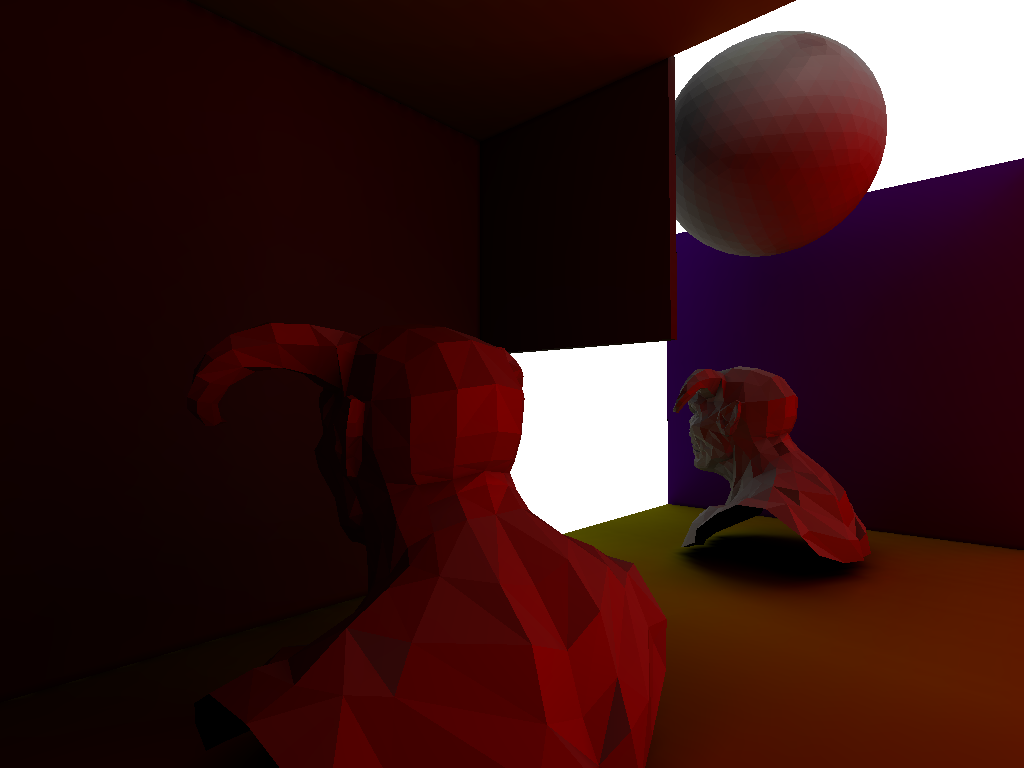

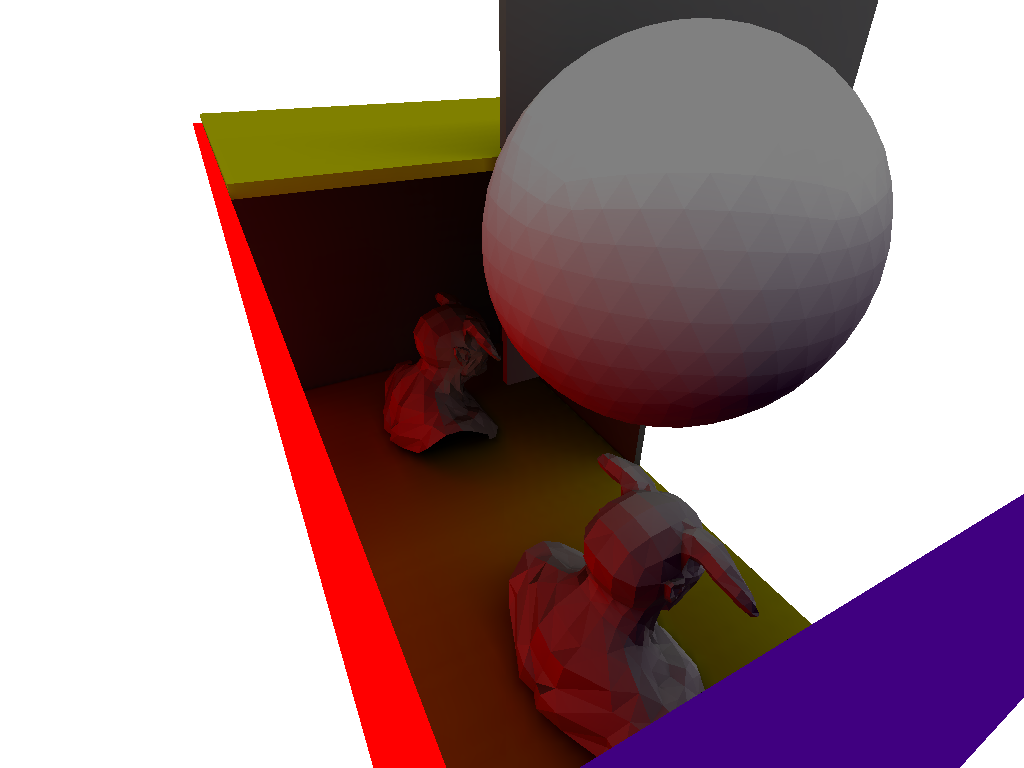

31 May 2014

In my second post I want to talk about a small project I implemented in my fungine. Modern computer games achieve a high level of realism, and one of the most important parts of this realism is lighting. In the example above we see a textureless scene rendered with two different lighting techniques:

Global Illumination (GI)

Normally a scene is rendered with rasterization, by projecting vertices onto an image plane. With rasterization you could render the image on the left by using shadow mapping (an approximation of real shadowing). GI, on the other hand, tries to achieve the image on the right by taking a more physically plausible approach: in nature, a light source emits photons, which travel at the speed of light (in a vacuum) until they hit a surface.

When light hits a surface, its energy is split into several parts:

- Diffuse: Think of the color of the material (some frequencies/colors of the light get absorbed by the surface). This part is view-invariant: no matter from which direction you look at the surface, the color doesn't change.

- Specular: Think of a mirror (the angle of incidence equals the angle of reflection). The important thing is that this part is view-dependent.

- Heat: Some energy is absorbed by the material and converted into heat (we don't care about this part).

The diffuse and specular parts themselves act as new light sources, bouncing around the scene and hitting other surfaces. These complex interactions create the image on the right.

Radiosity

Radiosity is a subset of GI in the sense that it only takes diffuse propagation into account. In the example above we can see that the red floor reflects some red light onto the white walls, which makes the image more realistic. Because radiosity only works on the diffuse part, it is also view-invariant, which means we can precalculate the radiosity of the whole scene offline and store it in a so-called texture atlas (one texture for the whole scene). This frees up computation time at runtime for other rendering effects (post-processing effects, GPU particles, ..., you name it). This process is called "lightmapping" or "lightmap baking" and is an old trick popularized by the game Quake.
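For reference, the classical radiosity equation (the standard textbook form, not written out in the original post) says that the radiosity \(B_i\) leaving patch \(i\) is its own emission \(E_i\) plus the fraction \(\rho_i\) it reflects of the light arriving from all other patches \(j\). The form factor \(F_{ij}\) is the cosine-weighted share of patch \(i\)'s hemisphere that is covered by patch \(j\):

\[ B_i = E_i + \rho_i \sum_{j} F_{ij} B_j \]

Nothing in this equation depends on the viewer, which is why it can be solved once offline. Roughly speaking, the algorithm that follows plays the same game: the averaged rendering from a texel stands in for \(\sum_j F_{ij} B_j\), and \(c_i \cdot R\) stands in for \(\rho_i\).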

Algorithm

To implement this effect I followed this great but dated article. The basic idea is to render the scene from the point of view of every texel in the atlas and update that texel's color accordingly.

- start with a triangle soup (a large number of triangles)
- create a texture atlas by fitting all triangles sequentially into the texture. Every pixel \(p_i = (c_i, n_i, pos_i)\) contains 9 values:
    - 3 for the color \(c_i\)
    - 3 for the normal \(n_i\)
    - 3 for the interpolated position \(pos_i\)
- upload a black texture (the same size as the atlas) with pixels \(gpu_i\) to the GPU
- until all \(gpu_i\) converge:
    - for every pixel \(p_i\) in the texture atlas:
        - put the camera at the position \(pos_i\), facing along the normal \(n_i\)
        - render the scene with the GPU
        - download the rendered image to the CPU
        - \(avg :=\) the average of the rendered image in color space
        - \(gpu_i := c_i \cdot avg \cdot R\) (multiplied per color channel), where \(R\) is the diffuse reflectivity, which depends on the material
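The loop above can be sketched on the CPU in Python with NumPy, assuming the GPU rendering step is replaced by a hypothetical `view_weights` matrix: row \(i\) holds the fraction of texel \(i\)'s rendered image covered by each texel \(j\) (rows sum to at most 1, the rest being black background), so `view_weights @ gpu` gives the average color each texel would see. `bake`, `view_weights` and the `emissive` mask are illustrative names, not taken from the post. One more assumption: this sketch updates every texel at once after a full sweep, whereas updating texels in place would feed new values into later renders of the same sweep.

```python
import numpy as np


def bake(colors, emissive, view_weights, reflectivity, tol=1e-6, max_iters=100):
    """Iterate gpu_i := c_i * avg_i * R until the lightmap stops changing.

    colors:       (n, 3) RGB color c_i of every atlas texel
    emissive:     (n,) bool mask of light-emitting texels
    view_weights: (n, n) hypothetical stand-in for rendering: row i gives the
                  share of texel i's rendered view covered by each texel j
    reflectivity: scalar or (n, 1) diffuse reflectivity R
    Returns the converged (n, 3) lightmap and the number of sweeps used.
    """
    colors = np.asarray(colors, dtype=float)
    emissive = np.asarray(emissive, dtype=bool)
    # Start from a black texture, except for emitters, which keep their color.
    gpu = np.where(emissive[:, None], colors, 0.0)
    for sweep in range(1, max_iters + 1):
        # "Render" from every texel and average the image: a weighted mean of
        # the current lightmap (uncovered parts of the view count as black).
        avg = view_weights @ gpu
        # Radiosity update, multiplied per color channel.
        new = colors * avg * reflectivity
        # Emitters are skipped, so their value never changes.
        new[emissive] = gpu[emissive]
        if np.max(np.abs(new - gpu)) < tol:
            return new, sweep
        gpu = new
    return gpu, max_iters


# Usage: a red emitter and a white wall that sees the emitter in half its view.
colors = [[1.0, 0.0, 0.0], [1.0, 1.0, 1.0]]
emissive = [True, False]
view_weights = np.array([[0.0, 0.0], [0.5, 0.0]])
lightmap, sweeps = bake(colors, emissive, view_weights, reflectivity=0.8)
```

In this toy example the white wall picks up a red tint of \(0.5 \cdot 0.8 = 0.4\) in the red channel after the first sweep, and the second sweep confirms convergence.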

Implementation

My implementation is separated into two files.

We first hand the GIUtil class a list of Bakeable objects (each described by an array of triangles and a color).

GIUtil creates an empty texture atlas lookup[TEXTURE_SIZE][TEXTURE_SIZE][9] and, for every triangle of every Bakeable, it:

- computes the normal of the triangle
- computes the uv coordinates of the triangle (getProjectedTriangle())
- finds a free space in the texture atlas where the uvs fit
- rasterizes the normals, interpolated positions and color into the texture atlas
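The per-triangle steps above can be sketched in Python with NumPy. Everything here is an assumption for illustration: `project_triangle` is a hypothetical stand-in for the post's getProjectedTriangle() (whose internals are not shown), the free-space search is a naive shelf packer, `TEXTURE_SIZE = 64` and the `COLOR`/`NORMAL`/`POSITION` channel order are made up, and a texel is filled only if its center lies inside the triangle. Like the post, it stores one flat normal per triangle.

```python
import numpy as np

TEXTURE_SIZE = 64  # illustrative; the post does not give the real size
# Assumed layout of the 9 values stored per texel.
COLOR, NORMAL, POSITION = slice(0, 3), slice(3, 6), slice(6, 9)


def triangle_normal(tri):
    """Unit face normal of a (3, 3) triangle; degenerate triangles are not handled."""
    n = np.cross(tri[1] - tri[0], tri[2] - tri[0])
    return n / np.linalg.norm(n)


def project_triangle(tri, texels_per_unit):
    """Flatten a 3D triangle into its own plane, in texels, with min corner (0, 0)."""
    normal = triangle_normal(tri)
    u = (tri[1] - tri[0]) / np.linalg.norm(tri[1] - tri[0])
    v = np.cross(normal, u)  # second in-plane axis, orthogonal to u
    flat = np.array([[(p - tri[0]) @ u, (p - tri[0]) @ v] for p in tri])
    flat *= texels_per_unit
    return flat - flat.min(axis=0)


class ShelfPacker:
    """Fill the atlas row by row, opening a new row (shelf) when one is full."""

    def __init__(self, size):
        self.size, self.x, self.y, self.shelf_h = size, 0, 0, 0

    def allocate(self, w, h):
        if self.x + w > self.size:  # current shelf is full: start a new one
            self.x, self.y, self.shelf_h = 0, self.y + self.shelf_h, 0
        if self.x + w > self.size or self.y + h > self.size:
            return None  # no space left in the atlas
        origin = (self.x, self.y)
        self.x += w
        self.shelf_h = max(self.shelf_h, h)
        return origin


def rasterize(lookup, uv, tri, normal, color, origin):
    """Store color, normal and interpolated position for texels whose center is inside."""
    (x0, y0), (x1, y1), (x2, y2) = uv
    area = (x1 - x0) * (y2 - y0) - (x2 - x0) * (y1 - y0)  # twice the signed area
    w, h = np.ceil(uv.max(axis=0)).astype(int)
    for py in range(h):
        for px in range(w):
            cx, cy = px + 0.5, py + 0.5
            # Barycentric weights of the texel center.
            b1 = ((cx - x0) * (y2 - y0) - (x2 - x0) * (cy - y0)) / area
            b2 = ((x1 - x0) * (cy - y0) - (cx - x0) * (y1 - y0)) / area
            b0 = 1.0 - b1 - b2
            if min(b0, b1, b2) < 0:
                continue
            texel = lookup[origin[1] + py, origin[0] + px]  # view into the atlas
            texel[COLOR] = color
            texel[NORMAL] = normal
            texel[POSITION] = b0 * tri[0] + b1 * tri[1] + b2 * tri[2]


def build_atlas(bakeables, texels_per_unit=8.0):
    """bakeables: list of (triangles with shape (m, 3, 3), rgb color) pairs."""
    lookup = np.zeros((TEXTURE_SIZE, TEXTURE_SIZE, 9))
    packer = ShelfPacker(TEXTURE_SIZE)
    for triangles, color in bakeables:
        for tri in np.asarray(triangles, dtype=float):
            normal = triangle_normal(tri)  # one flat normal per triangle
            uv = project_triangle(tri, texels_per_unit)
            w, h = np.ceil(uv.max(axis=0)).astype(int)
            origin = packer.allocate(w + 1, h + 1)  # +1 texel of padding
            if origin is None:
                raise ValueError("texture atlas is full")
            rasterize(lookup, uv, tri, normal, color, origin)
    return lookup
```

With this center-sampling rule, texels that a triangle only partially covers stay empty; the one-texel padding keeps neighboring triangles from overwriting each other, but it does not fill seams.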

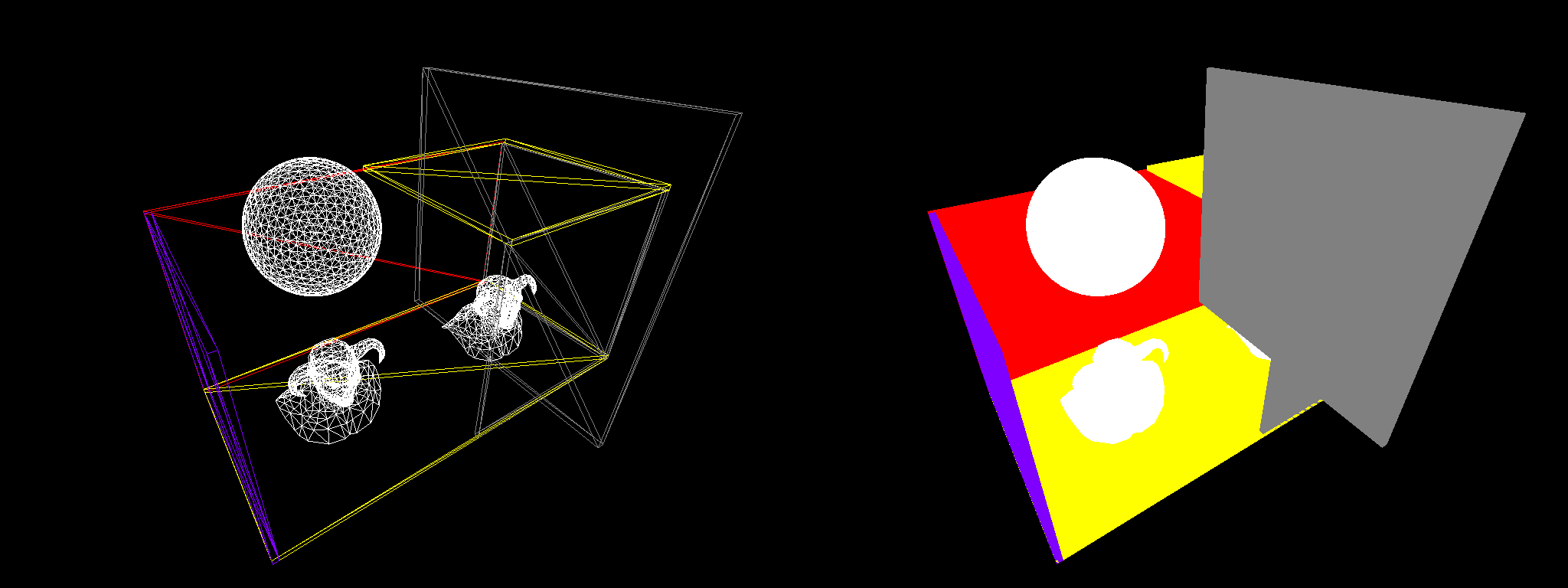

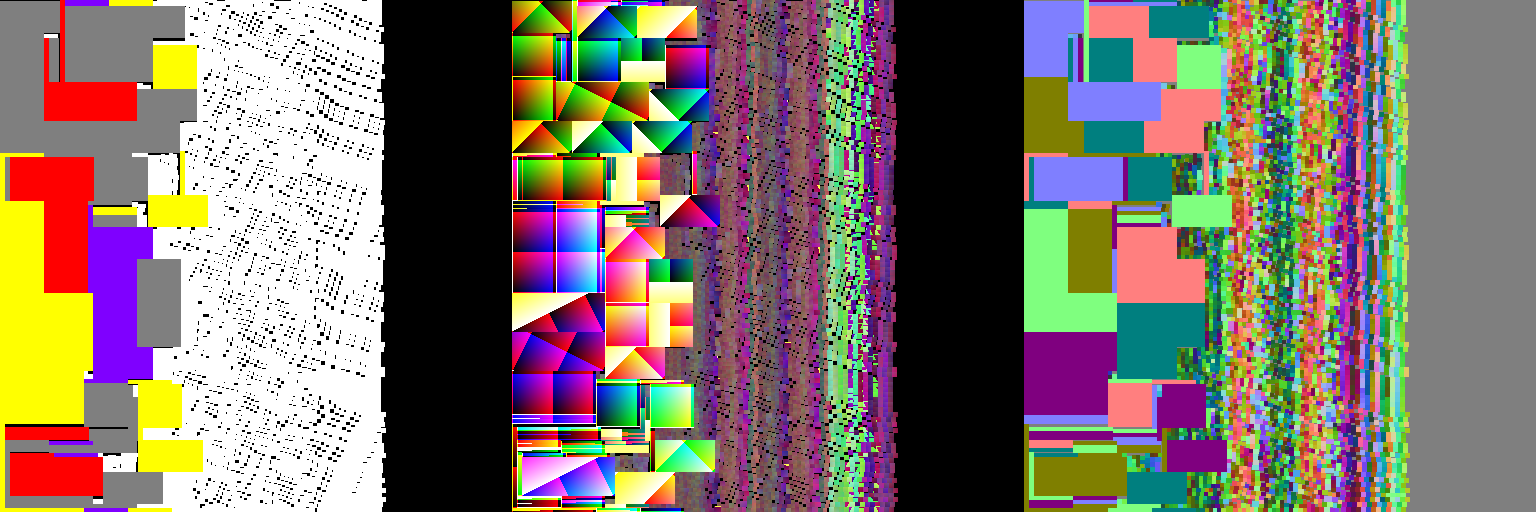

The resulting texture atlas (color,position,normal maps)

A black texture (except for emissive objects, in this case the red wall) and the vertices are sent to the GPU (uploadTexture(), sendToGPU()).

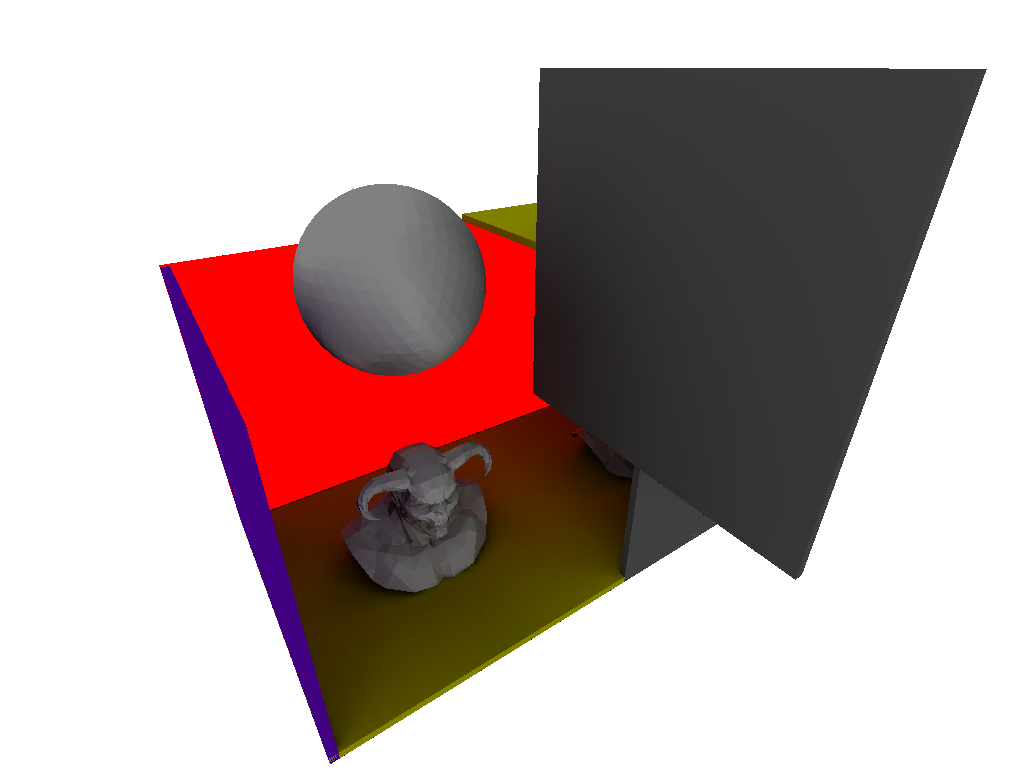

On the CPU we now visit every pixel in the texture atlas (except emissive and empty pixels) and place the camera at the corresponding position, facing along the stored normal (renderFromLookup()).
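Placing the camera at \(pos_i\) facing \(n_i\) comes down to building a view matrix. Below is a minimal NumPy sketch of a right-handed, OpenGL-style look-at matrix (the camera looks down its local \(-z\) axis). The function name `view_from_texel` and the choice of helper up-vector are assumptions, not taken from renderFromLookup(); the helper only has to be non-parallel to the normal, and which roll angle around the normal results is arbitrary here.

```python
import numpy as np


def view_from_texel(pos, normal):
    """4x4 world-to-camera matrix for a camera at `pos` looking along `normal`.

    OpenGL convention: the camera looks down its local -z axis.
    """
    pos = np.asarray(pos, dtype=float)
    forward = np.asarray(normal, dtype=float)
    forward = forward / np.linalg.norm(forward)
    # Any helper vector not parallel to the normal works; switch if nearly parallel.
    helper = np.array([0.0, 1.0, 0.0])
    if abs(forward @ helper) > 0.99:
        helper = np.array([1.0, 0.0, 0.0])
    right = np.cross(forward, helper)
    right /= np.linalg.norm(right)
    up = np.cross(right, forward)  # already unit length: right and forward are orthonormal
    view = np.eye(4)
    view[0, :3], view[1, :3], view[2, :3] = right, up, -forward
    view[:3, 3] = -view[:3, :3] @ pos  # move the texel position to the origin
    return view
```

A point two units in front of the texel along its normal ends up at \((0, 0, -2)\) in camera space, i.e. straight ahead of the camera.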

The scene is rendered into a framebuffer and the result is downloaded to the CPU (copyTextureToCPU()).

Some renderings from the corresponding texels

We compute the average of the pixel intensities and the new radiosity term with giUtil.radiosity(). Finally, we update the texture on the GPU with the result.
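The averaging and update step could look like the following NumPy sketch. `radiosity_update` is a hypothetical stand-in for giUtil.radiosity(), whose body the post does not show beyond \(gpu_i := c_i \cdot avg \cdot R\). The optional `weights` map goes beyond the post: a plain mean treats every pixel equally, while the classical hemicube method weights each pixel by its delta form factor. For a square 90-degree view (the top face of a hemicube, ignoring its four side faces), a pixel at image-plane coordinates \((x, y) \in [-1, 1]^2\) contributes proportionally to \(1 / (x^2 + y^2 + 1)^2\).

```python
import numpy as np


def hemicube_top_weights(size):
    """Normalized per-pixel weights for a square 90-degree view (hemicube top face)."""
    centers = (np.arange(size) + 0.5) / size * 2.0 - 1.0  # pixel centers in [-1, 1]
    x, y = np.meshgrid(centers, centers)
    w = 1.0 / (x**2 + y**2 + 1.0) ** 2  # delta form factor up to a constant
    return w / w.sum()


def radiosity_update(image, color, reflectivity, weights=None):
    """New lightmap value c_i * avg * R from one downloaded (H, W, 3) rendering."""
    image = np.asarray(image, dtype=float)
    if weights is None:
        avg = image.reshape(-1, 3).mean(axis=0)  # plain average, as in the post
    else:
        avg = np.tensordot(weights, image, axes=([0, 1], [0, 1]))  # weighted average
    return np.asarray(color, dtype=float) * avg * reflectivity
```

For example, if half of a rendering is `[1.0, 0.5, 0.0]` and the rest is black, a white texel with \(R = 0.5\) gets `[0.25, 0.125, 0.0]` with the plain mean.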

Results

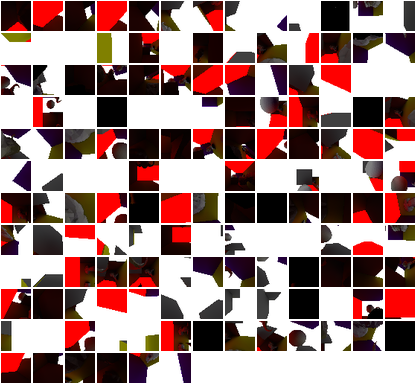

After 2-3 iterations the pixel intensities converge and we end up with a result like the one above.

Possible Improvements

Speed

This approach is very slow compared to the approaches used by real baking solutions (like Maya or Blender), because we have to download every rendered image to the CPU and send the result back to the GPU. Maybe a solution written purely in OpenCL/CUDA could compete with the usual path-tracing solutions.

Quality

You may have noticed that the triangles are flat shaded. This happens because I don't interpolate the normals in the texture atlas at the moment. I got stuck implementing the interpolation, because it turned out to be more difficult than I expected (I may write another post about this particular problem).
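A common starting point for removing flat shading is to compute per-vertex normals and blend them inside each triangle with the same barycentric weights that produce the interpolated position, then renormalize. The NumPy sketch below assumes an indexed mesh, so with a triangle soup, vertices at identical positions would first have to be merged. `vertex_normals` and `interpolate_normal` are hypothetical helpers, and the sketch does not tackle the specific difficulties mentioned above.

```python
import numpy as np


def vertex_normals(vertices, faces):
    """Area-weighted average of the face normals around each vertex (indexed mesh)."""
    vertices = np.asarray(vertices, dtype=float)
    faces = np.asarray(faces)
    tris = vertices[faces]  # (m, 3, 3) corner positions per face
    # Unnormalized cross product: its length is twice the triangle area.
    face_n = np.cross(tris[:, 1] - tris[:, 0], tris[:, 2] - tris[:, 0])
    normals = np.zeros_like(vertices)
    for corner in range(3):
        np.add.at(normals, faces[:, corner], face_n)  # accumulate at shared vertices
    return normals / np.linalg.norm(normals, axis=1, keepdims=True)


def interpolate_normal(bary, corner_normals):
    """Blend the three vertex normals with barycentric weights and renormalize."""
    n = np.asarray(bary, dtype=float) @ np.asarray(corner_normals, dtype=float)
    return n / np.linalg.norm(n)
```

Averaging across every shared vertex also rounds off intended hard edges (such as the corners of a room), so tools commonly restrict smoothing to edges below an angle threshold.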
